Whether you're an experienced data engineer or just starting your career, you must keep learning. It's more than just knowing the basics like how to build a batch ETL pipeline or run a dbt model; you need to adopt skills based on where the field is headed, not where it currently stands.

If you want to add forward-looking skills to your resume, real-time data engineering is a great place to focus. By gaining experience with real-time data tools and technologies like Kafka, ClickHouse, Tinybird, and more, you'll develop in-demand skills to help you get that promotion, land a new gig, or lead your company to build new use cases with new technology.


In this blog post, you'll find 8 real-time data engineering projects - with source code - that you can deploy, iterate on, and extend to develop the real-time data engineering skills that will advance your career.

But first, let's cover the basics.

What is real-time data engineering?

Real-time data engineering is the process of designing, building, and maintaining real-time data pipelines. These pipelines generally utilize streaming data platforms and real-time analytics engines and are often built to support user-facing features via real-time APIs.

While "real-time data engineering" isn't necessarily a unique discipline outside the boundaries of traditional data engineering, it represents an expanded view of what data engineers are responsible for, the technologies they must understand, and the use cases they need to support.


What does a real-time data engineer do?

Real-time data engineers must be able to build high-speed data pipelines that process large volumes of streaming data in real time. In addition to the basics - SQL, Python, data warehouses, ETL/ELT, etc. - data engineers focused on real-time use cases must deeply understand streaming data platforms like Apache Kafka, stream processing engines like Apache Flink, and real-time databases like ClickHouse, Pinot, and/or Druid.

They also need to know how to publish real-time data products so that other teams within the organization (like Product and Software) can leverage real-time data for things like user-facing analytics, real-time personalization, real-time visualizations, and even anomaly detection and alerting.

What tools do real-time data engineers use?

Real-time data engineers are responsible for building end-to-end data pipelines that ingest streaming data at scale, process that data in real-time, and expose real-time data products to many concurrent users.

Real-time data engineers lean heavily on streaming data platforms, stream processing engines, and real-time OLAP databases.

As a real-time data engineer, you'll be responsible for building scalable real-time data architectures. The main tools and technologies used within these architectures are:

- Streaming Data Platforms and Message Queues. Apache Kafka reigns supreme in this category, with many managed and Kafka-compatible offerings (Confluent Cloud, Redpanda, Amazon MSK, etc.). In addition to Kafka, you can learn Apache Pulsar, Google Pub/Sub, Amazon Kinesis, RabbitMQ, and even something as simple as streaming via HTTP endpoints.

- Stream Processing Engines. Stream processing involves transforming data in flight, sourcing it from a streaming data platform, and sinking it back into another stream. The most common open source stream processing engine is Apache Flink, though other tools like Decodable, Materialize, and ksqlDB can meet the need.

- Real-time OLAP databases. For most real-time analytics use cases, traditional relational databases like Postgres and MySQL won't meet the need. These databases are great for real-time transactions but struggle with analytics at scale. To be able to handle real-time analytics over streaming and historical data, you'll need to understand how to wield real-time databases like ClickHouse, Apache Pinot, and Apache Druid.

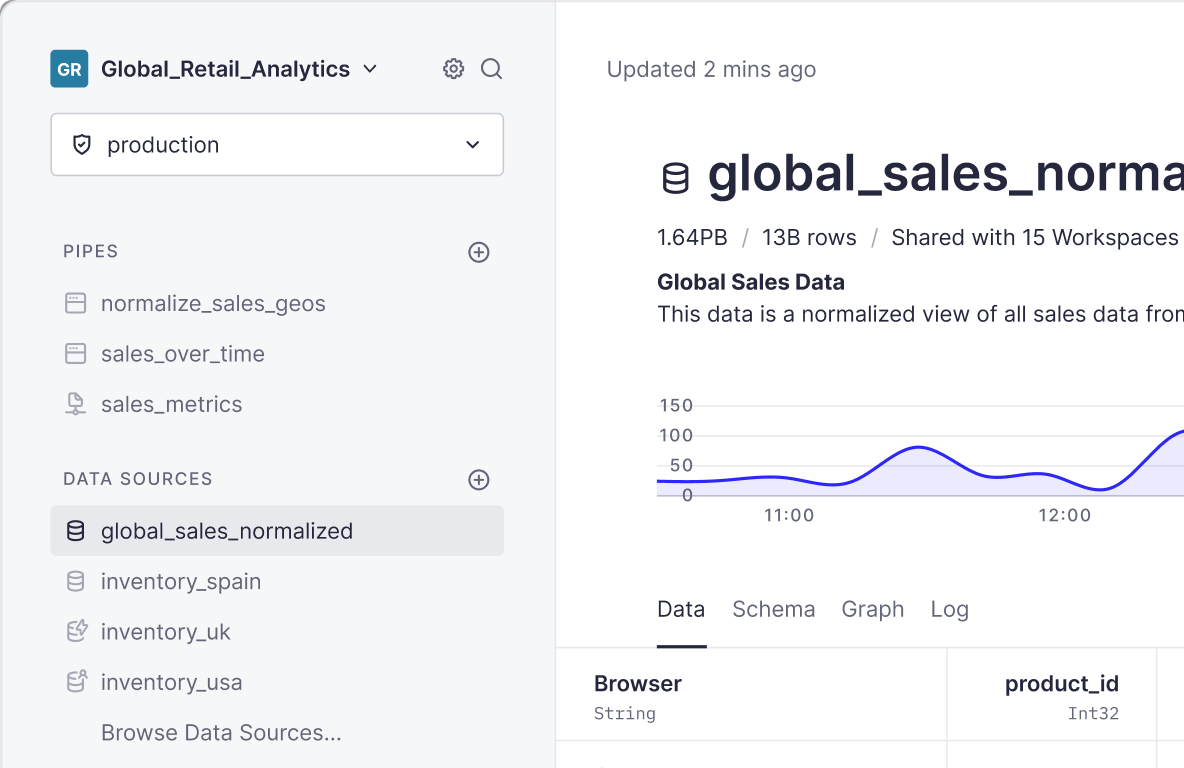

- Real-time API layers. Real-time data engineering is often applied to user-facing features, and it might fall on data engineers to build real-time data products that software developers can utilize. While API development is often the purview of backend engineers, new real-time data platforms like Tinybird empower data engineers to quickly build real-time APIs that expose the pipelines they build as standardized, documented, interoperable data products.
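To make the stream processing idea from the list above more concrete, here is a minimal sketch of a tumbling-window count, one of the most common in-flight transformations. It is plain Python rather than Flink or ksqlDB code, and the `Event` fields and the `tumbling_window_counts` function are hypothetical names chosen for illustration. It also assumes the events fit in memory, which a real stream would not.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class Event(NamedTuple):
    """A hypothetical streaming event; field names are assumptions."""
    timestamp: float  # seconds since some epoch
    key: str          # e.g. a page path or sensor ID


def tumbling_window_counts(events: Iterable[Event], window_seconds: int) -> dict:
    """Count events per key in fixed, non-overlapping time windows."""
    counts = defaultdict(int)
    for event in events:
        # Align each event to the start of the window it falls into.
        window_start = int(event.timestamp // window_seconds) * window_seconds
        counts[(window_start, event.key)] += 1
    return dict(counts)


events = [
    Event(0.5, "/home"),
    Event(3.0, "/home"),
    Event(7.2, "/pricing"),
    Event(12.1, "/home"),
]
counts = tumbling_window_counts(events, window_seconds=10)
for (window_start, key), count in sorted(counts.items()):
    print(window_start, key, count)
```

A stream processing engine applies the same logic continuously and also handles concerns this sketch ignores, such as late-arriving events, state checkpointing, and parallelism.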

Below is a table that can help you understand the types of tools and technologies that you'll use for real-time data engineering that expand upon a traditional data engineering stack.

Of course, these lists aren't mutually exclusive. Data engineers are called upon to perform a wide range of data processing tasks that will invariably include tools from both of these toolsets.

| TRADITIONAL DATA ENGINEERING | REAL-TIME DATA ENGINEERING |
|---|---|
| Coding Languages & Libraries | Streaming Data Platforms and Message Queues |
| Distributed Computing | Stream Processing Engines |
| Traditional Databases | Real-time OLAP Databases |
| Orchestration | Real-time Data Platforms |
| Cloud Data Warehouses/Data Lakes | API Development |
| Object Storage | |
| Business Intelligence | |
| Data Modeling | |
| Customer Data Platforms | |

A list of end-to-end real-time data engineering projects

Looking to get started with a real-time data engineering project? Here are 8 example projects to try. For each one, we've linked to resources including blog posts, documentation, screencasts, and source code.

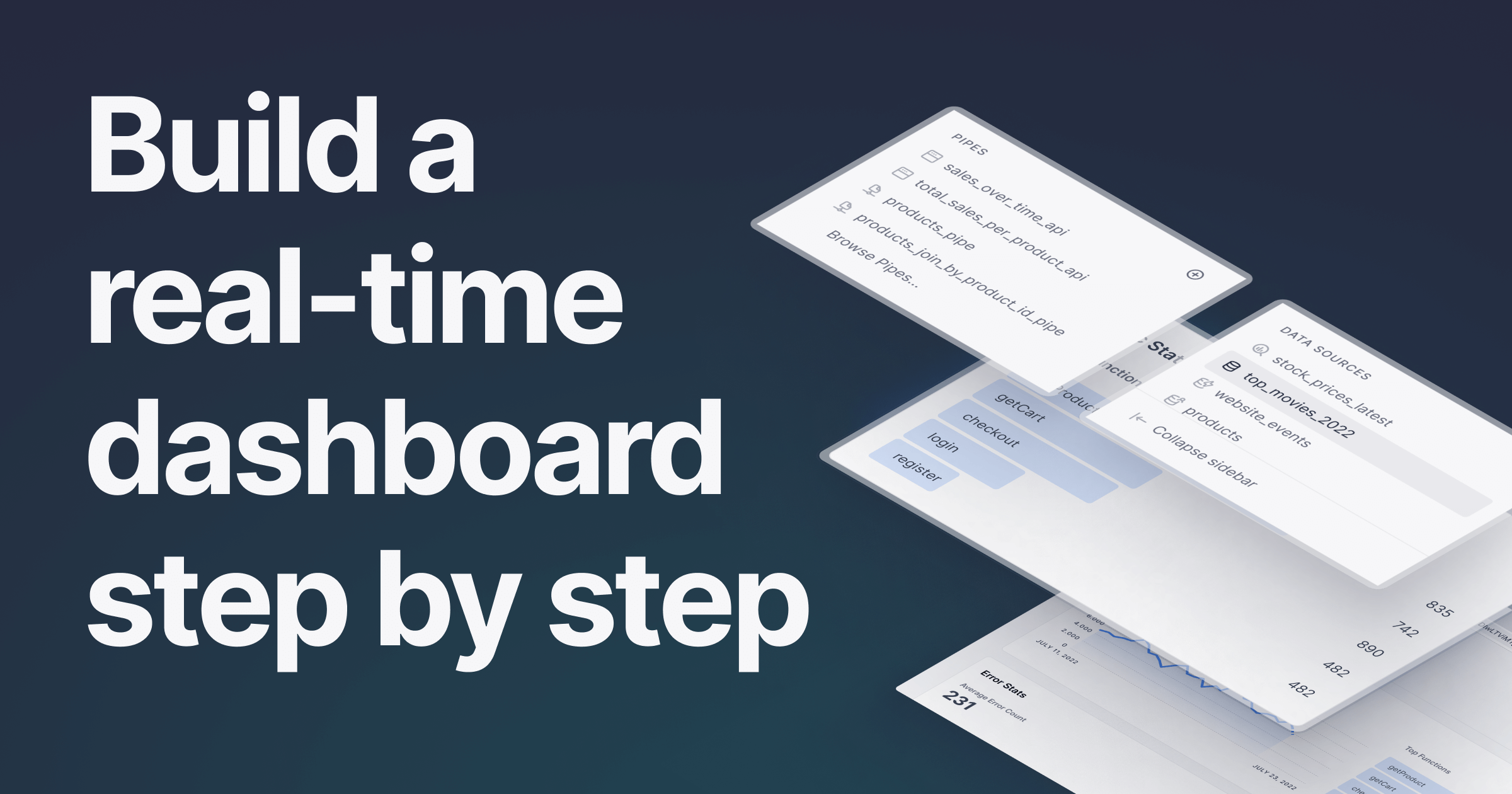

Build a real-time data analytics dashboard

Real-time dashboards are the bread and butter of real-time analytics. You capture streaming data, build transformation pipelines, and build visualization layers that display live, updating metrics. These dashboards may be for internal, operational intelligence use cases, or they may be for user-facing analytics.

Here are some real-time dashboarding projects you can build:

- Build a real-time dashboard with Tinybird, Tremor, and Next.js

- Build a real-time Python dashboard with Tinybird and Dash

- Build a real-time web analytics dashboard

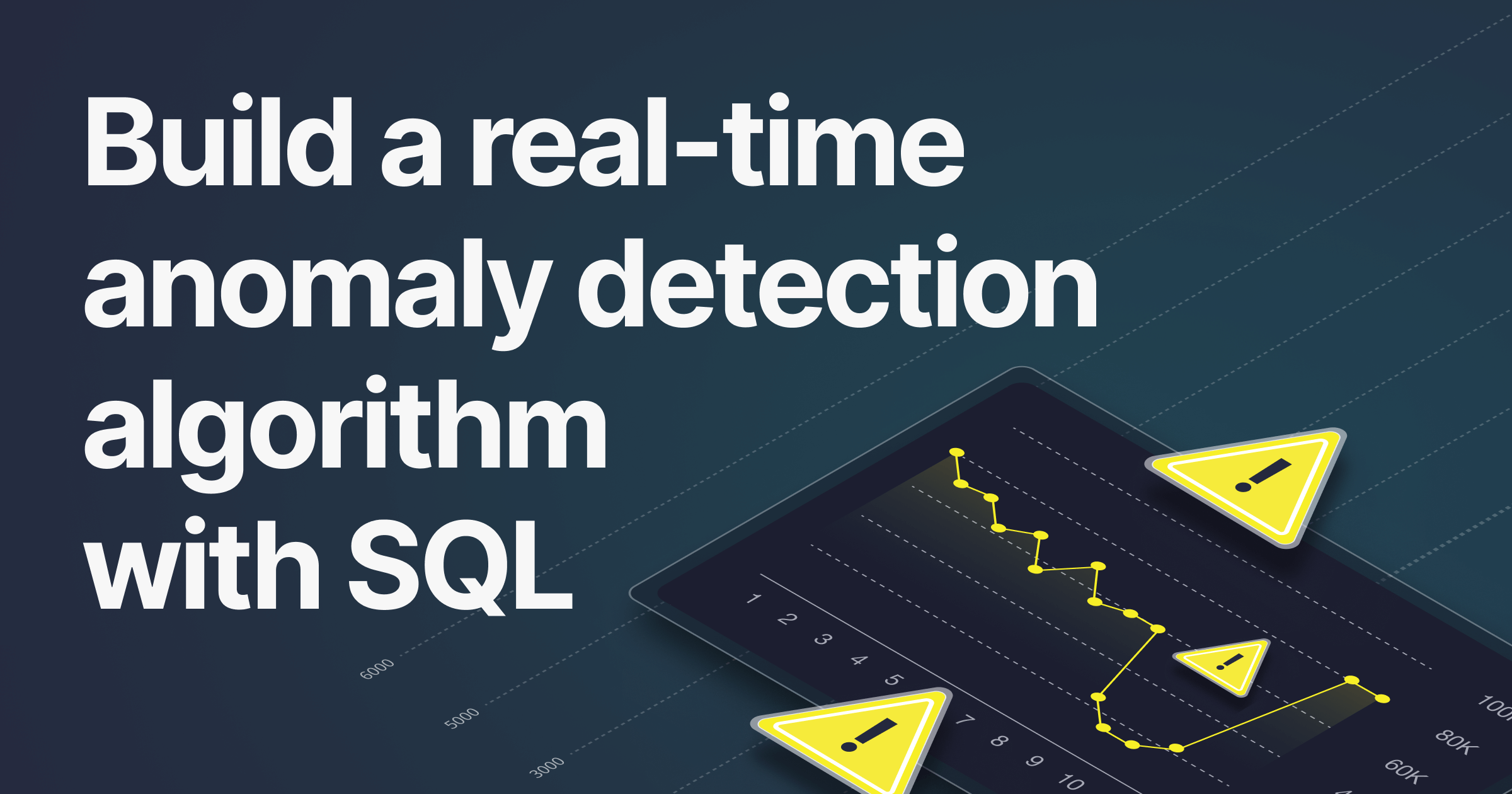

Build a real-time anomaly detection system

Anomaly detection is a perfect use case for real-time data engineering. You need to be able to capture streaming data from software logs or IoT sensors, process that data in real time, and generate alerts through systems like Grafana or Datadog.

Here are some real-time anomaly detection projects you can build:

- Build a real-time anomaly detector

- Use Python and SQL to detect anomalies with fitted models

- Create custom alerts with simple SQL, Tinybird, and UptimeRobot
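As a rough illustration of the detection step itself, the sketch below flags a reading as anomalous when it sits more than a chosen number of standard deviations from the mean of a rolling window. This is a generic z-score approach, not necessarily the method used in the linked projects. The `ZScoreDetector` class, its parameters, and the sample readings are all made up for this example.

```python
from collections import deque
from statistics import mean, stdev


class ZScoreDetector:
    """Hypothetical rolling z-score detector for a single metric."""

    def __init__(self, window_size: int = 20, threshold: float = 3.0):
        self.window = deque(maxlen=window_size)  # most recent readings
        self.threshold = threshold               # allowed z-score magnitude

    def observe(self, value: float) -> bool:
        """Return True if `value` looks anomalous against recent history."""
        is_anomaly = False
        if len(self.window) >= 2:  # stdev needs at least two points
            mu = mean(self.window)
            sigma = stdev(self.window)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        # Every reading is kept, so an outlier widens the window's spread
        # until it ages out. A production system might exclude it instead.
        self.window.append(value)
        return is_anomaly


detector = ZScoreDetector(window_size=20, threshold=3.0)
readings = [10.0, 10.2, 9.9, 10.1, 10.0, 9.8, 10.1, 50.0, 10.0]
flags = [detector.observe(r) for r in readings]
print(flags)
```

In a real pipeline the readings would arrive from a stream, and a flagged value would trigger an alert rather than a print statement.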

Build a website with real-time personalization

Real-time personalization is a common application for real-time data engineering. In this use case, you're building a data pipeline that analyzes real-time web clickstreams from product users, comparing that data against historical trends, and providing an interface (such as an API) to provide a recommended or personalized offer to the user in real time.

Here's a real-time personalization project that you can build:

- Build a real-time personalized eCommerce website
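The core of the personalization logic described above, combining historical behavior with what the user is doing right now, can be sketched in a few lines. This is a deliberately simple scoring rule under assumed inputs, not the approach of the linked project: `recommend_category`, `recency_weight`, and the category names are hypothetical.

```python
from typing import Optional


def recommend_category(
    historical_views: dict,
    session_clicks: list,
    recency_weight: float = 3.0,
) -> Optional[str]:
    """Pick the category to promote, weighting live clicks above history."""
    # Start from long-term view counts per category.
    scores = {category: float(n) for category, n in historical_views.items()}
    # Each click in the current session counts `recency_weight` times as much.
    for category in session_clicks:
        scores[category] = scores.get(category, 0.0) + recency_weight
    if not scores:
        return None  # nothing known about this user yet
    return max(scores, key=scores.get)


history = {"shoes": 5, "jackets": 2}
print(recommend_category(history, []))
print(recommend_category(history, ["jackets", "jackets"]))
```

In practice the historical counts would come from a precomputed aggregate, the session clicks from the live clickstream, and the function would sit behind a low-latency API endpoint.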

Build a real-time fraud detection system

Fraud detection is classic real-time analytics. You must capture streaming transaction events, process them, and produce a fraud determination - all in a couple of seconds or less.

Here's an example real-time fraud detection project you can build:

- How to build a real-time fraud detection system
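One simple kind of fraud determination is a velocity check: flag a card that makes too many transactions within a short window. The sketch below shows that single rule, assuming events arrive in timestamp order. Real systems combine many signals, and the `VelocityRule` class and its thresholds are illustrative assumptions rather than anything taken from the linked project.

```python
from collections import defaultdict, deque


class VelocityRule:
    """Hypothetical rule: too many transactions per card in a time window."""

    def __init__(self, max_transactions: int = 3, window_seconds: float = 60.0):
        self.max_transactions = max_transactions
        self.window_seconds = window_seconds
        self.recent = defaultdict(deque)  # card_id -> recent timestamps

    def is_suspicious(self, card_id: str, timestamp: float) -> bool:
        """Record a transaction and report whether the card exceeds the limit."""
        history = self.recent[card_id]
        # Evict transactions that have fallen out of the window.
        while history and timestamp - history[0] >= self.window_seconds:
            history.popleft()
        history.append(timestamp)
        return len(history) > self.max_transactions


rule = VelocityRule(max_transactions=3, window_seconds=60)
results = [rule.is_suspicious("card-A", t) for t in (0, 10, 20, 30)]
later = rule.is_suspicious("card-A", 100)
other = rule.is_suspicious("card-B", 15)
print(results, later, other)
```

Because the per-card state is a small deque, each decision takes roughly constant time, which is what makes a sub-second determination plausible at scale.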

Build an IoT analytics system with Tinybird

IoT sensors produce tons of time series data. Many real-time data engineers will be tasked with analyzing and processing that data for operational intelligence and automation.

Here's an example IoT analytics project for you to build:

- Build a complete IoT backend with Tinybird and Redpanda

- Live Coding Session

- GitHub Repo (1 and 2)

Build a real-time API layer over a data warehouse

Cloud data warehouses are still the central hub of most modern data stacks, but they're often too slow for user-facing analytics. To enable real-time data over a cloud data warehouse, you need to export it to a real-time data store.

Here are two examples of building a real-time layer over a cloud data warehouse by first exporting the data to Tinybird:

- Build a real-time dashboard over BigQuery with Tinybird, Next.js, and Tremor

- Build a real-time speed layer over Snowflake

Build a real-time event sourcing system

Event sourcing is classic real-time data engineering. Rather than maintain state in a traditional database, you can use event sourcing principles to reconstruct state from an events stream. Event sourcing gives you a complete audit trail and lets you rebuild state as of any point in time, so it's a great project for aspiring real-time data engineers.

Here's an example event-sourcing project:

- A practical example of event sourcing with Apache Kafka and Tinybird

- Blog Post (with code)
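The central idea, reconstructing state by replaying events instead of reading a stored value, fits in a short sketch. The account example below is hypothetical and is not taken from the linked post: `AccountEvent`, its fields, and the two event kinds are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class AccountEvent:
    """A hypothetical immutable event in an append-only log."""
    account_id: str
    kind: str    # "deposited" or "withdrew"
    amount: int  # in cents


def replay(events: Iterable[AccountEvent]) -> dict:
    """Rebuild current balances by folding over the full event history."""
    balances = {}
    for event in events:
        if event.kind == "deposited":
            delta = event.amount
        elif event.kind == "withdrew":
            delta = -event.amount
        else:
            raise ValueError(f"unknown event kind: {event.kind}")
        balances[event.account_id] = balances.get(event.account_id, 0) + delta
    return balances


log = [
    AccountEvent("acct-1", "deposited", 100),
    AccountEvent("acct-2", "deposited", 50),
    AccountEvent("acct-1", "withdrew", 30),
]
balances = replay(log)
print(balances)
```

Replaying only a prefix of the log gives the state as of that point in time. In a Kafka-based setup, the log would be a topic and the fold would typically be maintained incrementally rather than recomputed from scratch.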

Build a real-time CDC pipeline

Change data capture shouldn't be new to most data engineers, but it can be used as a part of a real-time, event-driven architecture to perform real-time analytics or trigger other downstream workflows.
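To show what a downstream consumer of a CDC stream does, here is a minimal sketch that applies change events to an in-memory replica of a table. The event shape (`op`, `key`, `row`) is a simplified, hypothetical format invented for this example. Real CDC tools emit richer payloads, so treat the field names as assumptions.

```python
def apply_change(replica: dict, change: dict) -> None:
    """Apply one change event to a replica keyed by primary key."""
    op = change["op"]
    key = change["key"]
    if op in ("insert", "update"):
        # Upsert: the event carries the full new row image.
        replica[key] = change["row"]
    elif op == "delete":
        # Tolerate deletes for rows the replica never saw.
        replica.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op}")


changes = [
    {"op": "insert", "key": 1, "row": {"name": "Ada", "plan": "free"}},
    {"op": "insert", "key": 2, "row": {"name": "Lin", "plan": "pro"}},
    {"op": "update", "key": 1, "row": {"name": "Ada", "plan": "pro"}},
    {"op": "delete", "key": 2},
]

replica = {}
for change in changes:
    apply_change(replica, change)
print(replica)
```

Applying events strictly in order is what keeps the replica consistent with the source. The same loop could instead trigger downstream workflows or feed a real-time analytics store.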

Here are some example real-time change data capture pipelines you can build for three different databases: