These are 10 of the principles of DataOps that we make available to data teams.

While we always challenge our assumptions, this new paradigm guides the way we are building Tinybird, deeply focused on simplicity, speed and developer experience.

1. The Data Project

This is like your framework (think of MVC for web development). It describes how your data should be stored, processed, and exposed through APIs.

You put there your know-how, abstractions and the focus on the problem you are solving.

2. Serialization

All your table schemas, transformations and endpoints need to be serializable. Keep the format simple for humans to read and write. If possible, map every resource to a text file.

3. Version Control

This is a consequence of having a data project and a file format. Treat your data project as regular source code, and push it to a source version control system (e.g. git)

From there you get traceability over changes, collaboration, peer reviews, automations and the very same workflow and best practices you are used to as a development team.

4. Continuous Integration and Deployment

The key for most of the things developers do is speed. Innovation is iteration and if you are fast you can learn faster and iterate faster.

This means making an assumption, running an experiment, learn and repeat, ensuring quality.

Your system should allow you to easily create testing environments and use fixtures, so you can build, test and measure your data pipelines and endpoints on every change.

5. Lead Time

- Deploying to production should be seconds (not minutes or hours)

- Fixing a bug should be minutes (not hours or days)

- Developing a new feature should be hours (not days or weeks)

6. Data Quality Assurance

So you are a data engineer working for a Fortune 500 company, are you confident enough (or even allowed) to make a change in any of your data pipelines and push it to production right away in a matter of minutes?

How do you ensure data quality in your data product?

Data needs tests, even more than code.

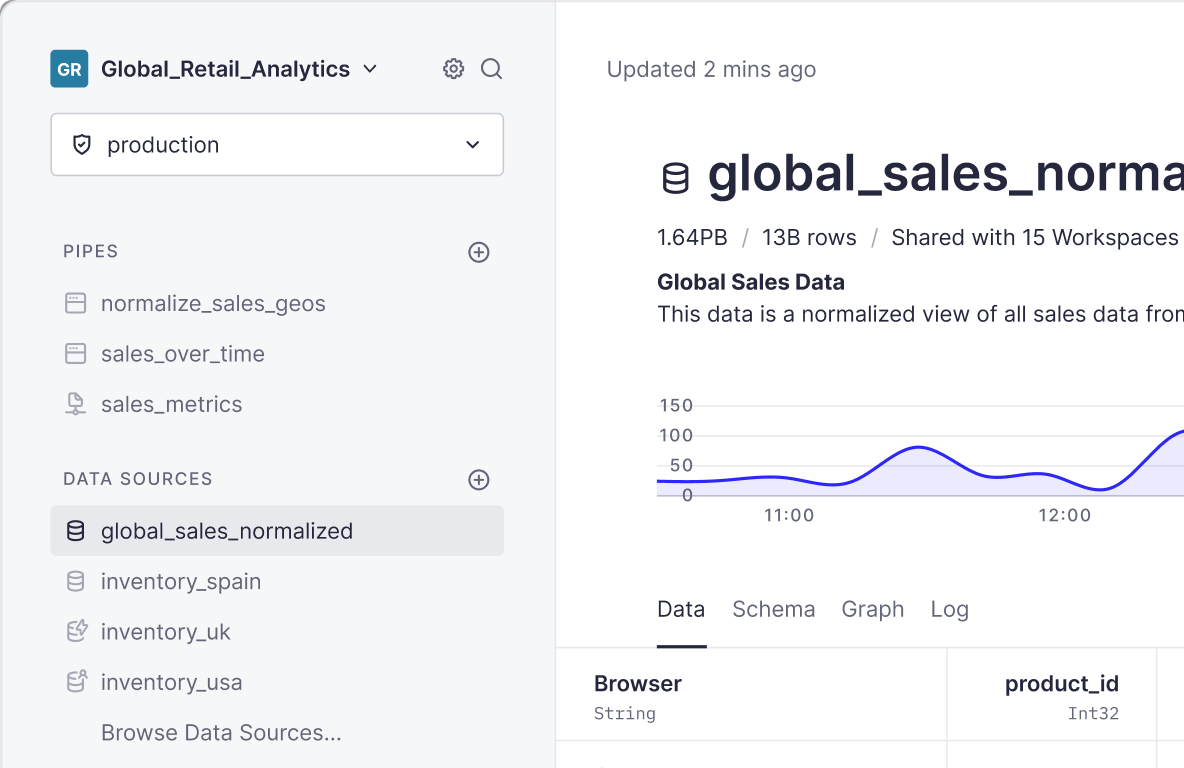

7. Tools

Pick tools based on your goals, but as a starting point, your tools should make it easy for your team to access, share, and analyze data.

You should be able to work from your terminal or IDE without leaving your data project context.

Avoid steep learning curves, use a familiar syntax, short and clear so you can run and automate stuff quickly.

When it comes to data exploration and problem-solution discovery, you need instant feedback.

8. Observability

You need to run and understand your data in production and quickly learn if it's solving your business problems.

Automate health checks, monitor performance, allow runtime traceability and implement an alerting system.

9. Recipes and building blocks

The data development experience should be as close as the experience you actually have working with some library that you will import and use in any language.

Your analysis should be idempotent, composable and immutable. Wrap them and make them reusable right away.

Wrap your analyses into reusable data projects.

10. Fine Tuning

Query optimization is a never ending process. You should monitor queries and transformations to build a system that helps fine tuning your data products.

Bonus track: publication and documentation

Data and development teams work together. Your data are exposed as auto-documented APIs, so it can be integrated anywhere.

Don't forget about not so cool tools such as spreadsheets and traditional BI.

What are your main challenges when dealing with large quantities of data? Tell us about them and get started solving them with Tinybird right away.