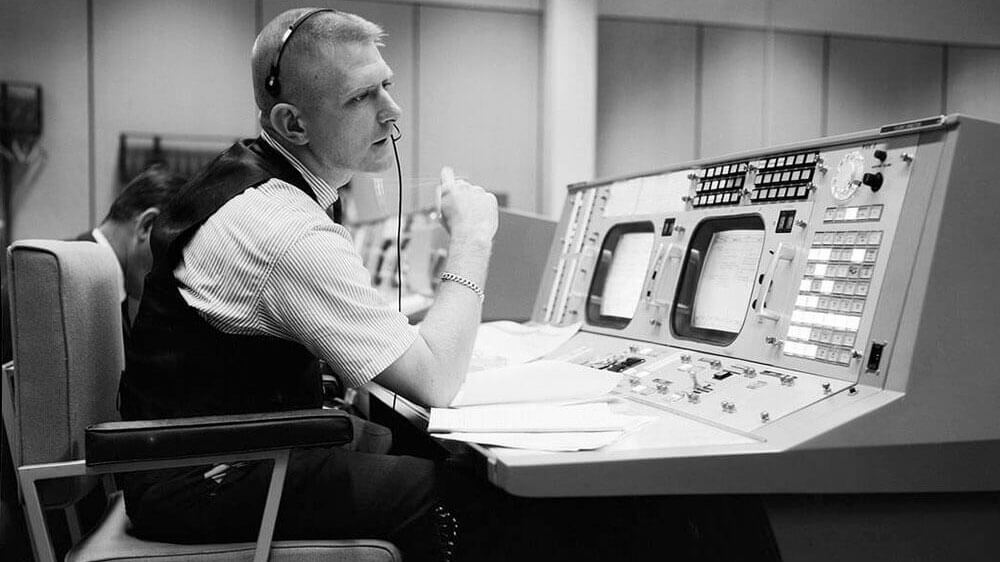

NASA Official: “I know what the problems are, Henry. This could be the worst disaster NASA has ever experienced.”

Gene Kranz (Flight Director, Apollo 13): “With all due respect, sir, I believe this is going to be our finest hour.”

From the movie Apollo 13

When you operate a technical platform, incidents are bound to happen eventually. And while at Tinybird we work really hard to prevent them from happening, we also know that part of providing an outstanding level of service is to react quickly, accurately and professionally when they do inevitably hit.

Recently we had downtime on the dedicated infrastructure that we manage, as part of our service, for one of our biggest customers. This post is about what happened and how we reacted to it.

A bit of context

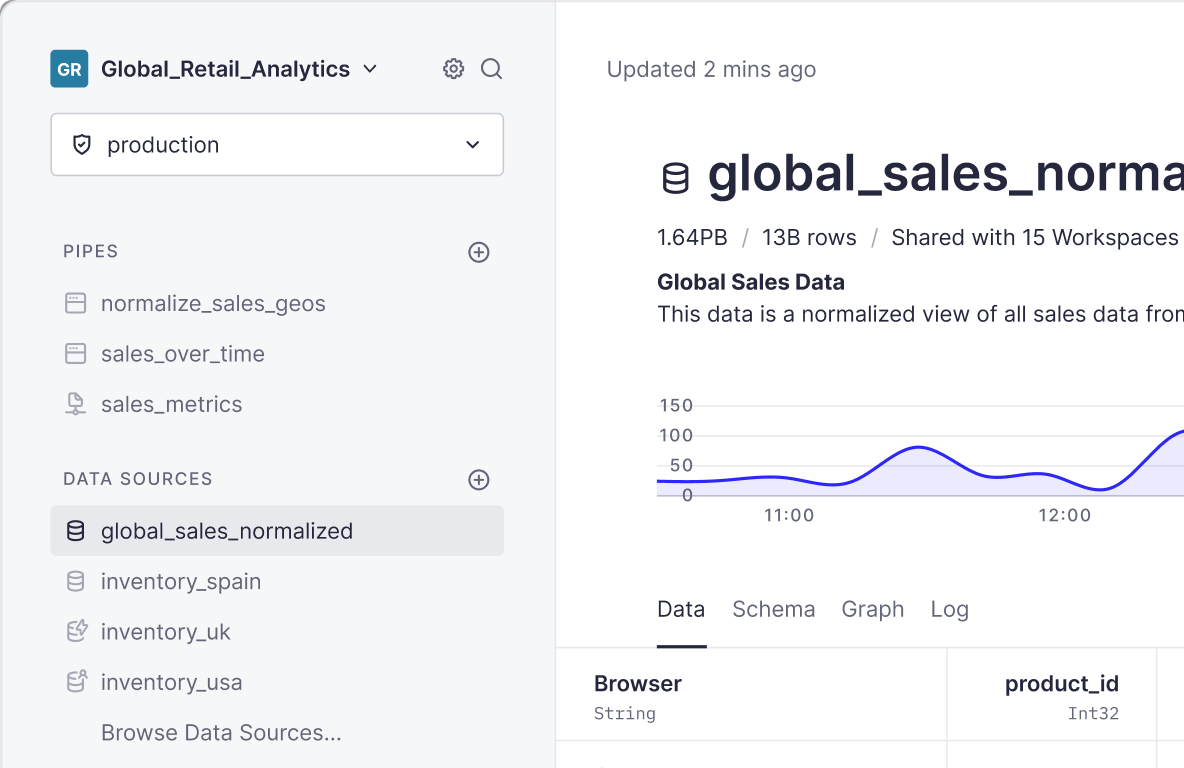

When we provide managed and dedicated infrastructure for a given customer, we design it to be highly scalable and highly available. In this particular case, we have SLAs for both uptime and performance, guaranteeing sub-second queries against:

- a constantly growing dataset (currently in the high hundreds of millions of records)

- at a concurrency of between 20 and 80 requests per second.

If we don’t meet our SLA, we pay service credits, which means we deduct a pre-agreed amount of money from the following month’s invoice.

Also, we want our service to “just scale”, so that our users never have to worry about how many CPU cores they need to handle their current load. We want it to scale as needed, and for our customers to marvel at how fast Tinybird always flies :-)

To achieve that, we have improved both our failover capabilities and our infrastructure’s burstability, to ensure that:

- if one of the many replicated ClickHouse database servers we run in parallel malfunctions or crashes, it fails gracefully, with little impact on the service.

- we can easily add more replicas when the load increases beyond certain thresholds.
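As a rough illustration of the threshold idea, here is a minimal Python sketch of how a desired replica count could be derived from the current request rate. The per-replica capacity, the minimum and maximum replica counts, and the function name `desired_replicas` are all hypothetical assumptions for illustration, not Tinybird’s actual scaling logic.

```python
import math


def desired_replicas(current_rps: float,
                     rps_per_replica: float,
                     min_replicas: int,
                     max_replicas: int) -> int:
    """Hypothetical threshold-based sizing: one replica per `rps_per_replica`
    requests per second, clamped to [min_replicas, max_replicas]."""
    if rps_per_replica <= 0:
        raise ValueError("rps_per_replica must be positive")
    # Round up, so load just above a threshold adds one more replica.
    needed = math.ceil(current_rps / rps_per_replica)
    # Never drop below the floor (kept for failover) or exceed the ceiling.
    return max(min_replicas, min(max_replicas, needed))


if __name__ == "__main__":
    # Assumed capacity of 20 req/s per replica, at the top of the 20-80 req/s range.
    print(desired_replicas(80, 20, min_replicas=2, max_replicas=10))
```

Keeping a floor above one replica preserves the failover property from the first bullet; a real autoscaler would also want some hysteresis, so that replicas are not added and removed on every small fluctuation in load.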

The incident

On December 19th we were, for the first time for this particular customer, using our new capability to add and remove replicas automatically. Suddenly, all hell broke loose.

Our first mistake was not scheduling a maintenance window with our customer for this change. The truth is that we didn’t even consider it at the time, because we had no reason to doubt that it would work: we had tested it several times before, in other environments and in this particular customer’s staging environment.

But we were wrong. A few weeks earlier, for consistency reasons, we had decided to change the data path (the name of the folder where the actual data is stored on disk) across all of our infrastructure. It turned out that we had changed it everywhere except on this particular customer’s production infrastructure. That was our second mistake, and a pretty basic but serious one.

So when we deployed the configuration change to the production environment, every database server was given a data path directory different from the one where the data actually was. And so the incident began.
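One way to catch this class of mistake before it reaches production is a pre-deploy guard that refuses to point a server at a data path that does not exist or is empty. The Python sketch below is a hypothetical illustration of that idea: the function name `data_path_problems` and the “empty directory means block the deploy” rule are assumptions, not part of Tinybird’s or ClickHouse’s tooling.

```python
from pathlib import Path


def data_path_problems(configured_path: str) -> list[str]:
    """Hypothetical pre-deploy guard: explain why `configured_path` should NOT
    be rolled out as a database data path. An empty list means it looks safe."""
    path = Path(configured_path)
    if not path.exists():
        return [f"{configured_path} does not exist"]
    if not path.is_dir():
        return [f"{configured_path} is not a directory"]
    # A production node that already holds data should never be pointed at an
    # empty directory: that is the "servers up, but no data" failure mode.
    if not any(path.iterdir()):
        return [f"{configured_path} is empty; expected existing data"]
    return []


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as empty_dir:
        # A freshly created directory holds no data, so the guard flags it.
        print(data_path_problems(empty_dir))
```

Run against each production server before applying a configuration change, a non-empty result would block the rollout instead of silently starting the servers on an empty folder.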

Our monitoring automatically raised an alert: all queries were suddenly failing. Of course they were! The database servers had started using a data path with no active databases in it: they were up and running and accepting requests, but every query was failing.

For the first few minutes it wasn’t clear what was going on: the deployment had finished successfully and the servers looked fine. Where was the data?

Fortunately we had our best people on the job and we were able to solve it fairly quickly. Here is how we reacted.

Our reaction

First, since we didn’t yet know the cause and there was a chance of data loss, we decided to call our main contact at the customer right away.

What we essentially said was: “Hey, we wanted to let you know that we have a situation: the service is currently down and we are actively working to resolve it. There is a possibility we may have to restore data backups and/or ask you to re-ingest some of the data. No need to do anything on your side yet, we will keep you posted.”

Our customer had already noticed the outage, so it was good that we called them before they had to call us.

If we have learnt one thing over the years, it is that when shit hits the fan there is nowhere to hide, and keeping your customers well informed of progress is always your best move.

It only took our team a few minutes to figure out what was going on but, because every database server in the cluster was affected, fixing them all took some time. Service came back gradually and was fully restored after 28 painful minutes.

We then contacted our customer again to explain what had happened and promised an incident report, which we sent later that same day.

The Incident Report

The full incident report contains sensitive information, so we include only some parts of it below, but enough to give an idea of what it looked like.

Our incident reports always have four sections: Summary; Resolution and recovery timeline; Root cause; and Corrective and preventive measures.

1. Summary

A brief description of both the disruption and the incident. It literally read:

2. Resolution and recovery timeline

A minute-by-minute account of what happened, what we knew or didn’t at each point in time and actions we took accordingly. Here is an excerpt:

3. Root cause

We have pretty much already explained the root cause in this post. In this section of the report we explained in detail what caused it in the first place, why this particular cluster was not configured properly, and so on.

4. Corrective and preventive measures

Here we set out the actions we would take to (a) prevent a similar incident from happening in the future and (b) help the system “fail better” if a similar situation were ever to arise again.

For instance, while handling this incident we realised that our load balancer health checks were only checking whether the database servers were ‘available’ (essentially, just “up”) rather than ‘functionally available’. As a result, requests kept being routed to database servers that could not return any data. We have since updated the health checks to take that into account.
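The difference between the two kinds of health check can be sketched in a few lines of Python. A liveness probe (for example, ClickHouse’s HTTP `/ping` endpoint) only confirms that the process answers; a functional probe runs a real query against real data. In this sketch the `QueryRunner` callable, the `probe.events` table and the row threshold are hypothetical assumptions, not the checks we actually deployed.

```python
from typing import Callable

# Hypothetical: sends SQL to one database server and returns the raw text
# response, raising an exception if the query fails.
QueryRunner = Callable[[str], str]


def is_alive(ping: Callable[[], bool]) -> bool:
    """Liveness only: the process answers, whether or not it can serve data."""
    try:
        return ping()
    except Exception:
        return False


def is_functionally_available(run_query: QueryRunner,
                              probe_table: str,
                              min_rows: int = 1) -> bool:
    """Functional check: the server must answer a real query on real data."""
    try:
        result = run_query(f"SELECT count() FROM {probe_table}")
        return int(result.strip()) >= min_rows
    except Exception:
        # Missing table, connection error or unparsable output: take the
        # node out of the load balancer rotation.
        return False


if __name__ == "__main__":
    # Simulate the incident: the server is up, but the table is gone because
    # it is reading from the wrong data path.
    def broken_runner(sql: str) -> str:
        raise RuntimeError("Table probe.events doesn't exist")

    print(is_alive(lambda: True))                                    # True
    print(is_functionally_available(broken_runner, "probe.events"))  # False
```

In the simulated scenario the liveness check passes while the functional check fails, which is exactly the gap that let requests keep reaching servers with no data.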

Additionally, thanks to this particular incident, we discovered a bug in ClickHouse, for which we have submitted a patch; the fix is in progress.

Every service disruption is painful for us in more than one way: not only does it make us feel that we are providing less than ideal service to our customers, it can also cost us money (as mentioned above, we pay service credits if we do not meet our SLA).

However, incidents are also a great source of learning and improvement. When they do happen, we react to the very best of our ability, so that we can at least be proud of how we handled the whole situation.

We hope this was useful. Drop us a line and let us know what you think.